This week we investigate a new way of broadcasting sound that may let each of us hear audio that nobody nearby can hear. We examine a new design for a lightsail, currently the most likely way to propel missions to nearby stars. We discover a new platform that lets us use text prompts to design and print small personal robots. Finally, we learn about a new machine that can tell us which tuna will make the best sashimi and sushi.

Private Listening

A team at Penn State have made a breakthrough that may one day give each of us a small zone where only we can hear the sound being broadcast. No headphones required. Inside one of these zones, called audible enclaves, you can hear the sound but nobody nearby can.

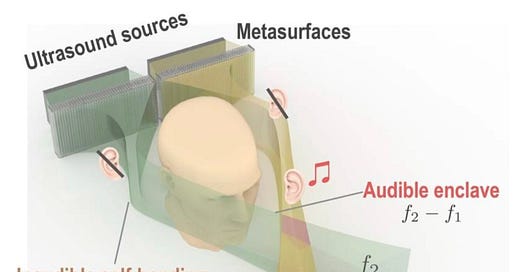

The team were able to generate two nonlinear ultrasonic beams, with the sound audible only where the beams intersect. No matter where anyone else is standing, they cannot hear it.

Two ultrasonic transducers paired with an acoustic metasurface were used. Each transducer emits an ultrasonic wave at a slightly different frequency. The metasurface, placed in front of the two transducers, bends the path of the sound. The sound can be heard at the point where the two waves intersect and nowhere else.
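Why would slightly different frequencies matter? When two tones mix nonlinearly, the result contains a component at the difference of the two frequencies, and that difference can fall in the audible range even though both inputs are ultrasonic. Here is a minimal sketch of that arithmetic. The 40 kHz and 39.5 kHz values are illustrative assumptions, not figures from the Penn State work, and multiplying two tones is only a simplified stand-in for what happens where the beams cross.

```python
import math

def mixing_products(f1_hz, f2_hz):
    """Return the (difference, sum) frequencies created when two tones multiply."""
    return abs(f1_hz - f2_hz), f1_hz + f2_hz

def product_of_tones(f1_hz, f2_hz, t):
    """Value at time t (seconds) of two cosine tones multiplied together."""
    return math.cos(2 * math.pi * f1_hz * t) * math.cos(2 * math.pi * f2_hz * t)

def difference_plus_sum(f1_hz, f2_hz, t):
    """The same signal rewritten as a difference tone plus a sum tone."""
    diff_hz, total_hz = mixing_products(f1_hz, f2_hz)
    return 0.5 * (math.cos(2 * math.pi * diff_hz * t)
                  + math.cos(2 * math.pi * total_hz * t))

# Hypothetical transducer frequencies, both well above human hearing.
F1_HZ = 40_000.0
F2_HZ = 39_500.0

difference_hz, sum_hz = mixing_products(F1_HZ, F2_HZ)

# Human hearing spans very roughly 20 Hz to 20 kHz.
difference_is_audible = 20.0 <= difference_hz <= 20_000.0
sum_is_audible = 20.0 <= sum_hz <= 20_000.0
```

With these assumed numbers the difference tone lands at 500 Hz, which we can hear, while the sum tone stays ultrasonic.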

The beams can bypass obstacles like a human head to reach the desired intersection point. The team tested the system in a range of environments such as classrooms, vehicles and outdoors.

Currently the team can deliver sound to a target about a meter away at a volume of 60 decibels (normal speaking level). The team is working on increasing the distance and volume by increasing the ultrasound intensity.

Lightsails

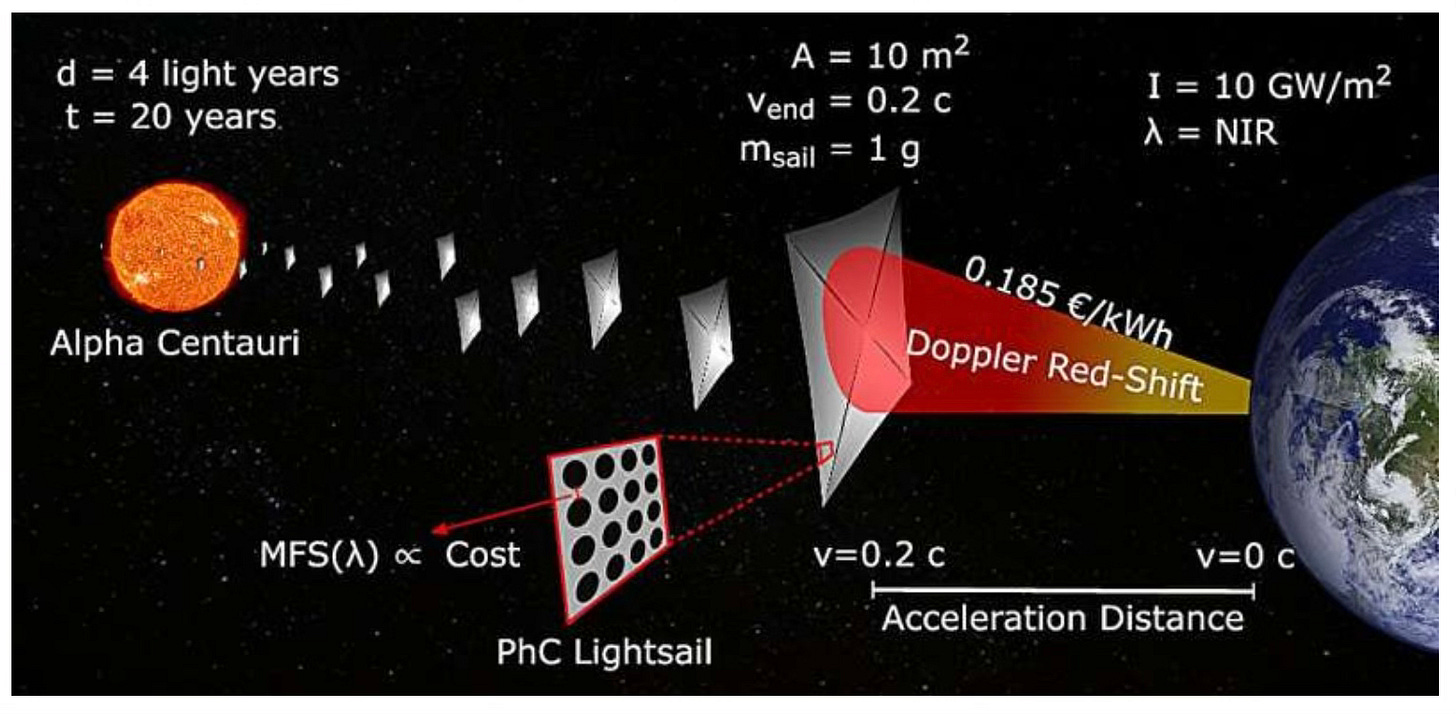

One of the ways we may be able to send small probes to nearby stars is by using lightsails to propel them. A lightsail is a thin, reflective membrane that is pushed by light, much as wind pushes a sailboat.

Voyager 1 was launched in 1977 and so far has travelled 15 billion miles into space. Whilst that is a long way, it is less than a tenth of 1% of the distance to our nearest neighbouring star system, Alpha Centauri. We will have to make our spaceships a lot faster if we are going to reach the stars in our lifetimes. It is thought that lightsails could reduce the flight time from several thousand years to perhaps a decade or two.
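To put Voyager 1's progress in perspective, here is a rough back-of-the-envelope check. The 15 billion miles comes from the paragraph above. The distance to Alpha Centauri (about 4.37 light years) and the miles-per-light-year conversion are approximate values I have assumed for the calculation.

```python
# Figure quoted in the text above.
VOYAGER_1_MILES = 15e9

# Approximate values assumed for this sketch.
ALPHA_CENTAURI_LIGHT_YEARS = 4.37
MILES_PER_LIGHT_YEAR = 5.879e12

def fraction_of_trip(travelled_miles, target_light_years):
    """Fraction of the journey to a target covered so far."""
    target_miles = target_light_years * MILES_PER_LIGHT_YEAR
    return travelled_miles / target_miles

fraction = fraction_of_trip(VOYAGER_1_MILES, ALPHA_CENTAURI_LIGHT_YEARS)
percent_of_trip = fraction * 100

print(f"Voyager 1 has covered about {percent_of_trip:.3f}% of the distance")
```

Under these assumptions the answer comes out at a few hundredths of one percent.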

A team at Brown University (US) and Delft University of Technology (Netherlands) have developed a way of designing and fabricating the ultra-light materials for lightsails. Each membrane is 60 millimeters long and wide but only 200 nanometers thick (a tiny fraction of the width of a human hair).

The surface is patterned with billions of nanoscale holes, which reduce the weight and increase the reflectivity of the material. The reflectivity of the surface determines how much light pressure pushes on the sail. The more reflective the material, the greater the potential acceleration. A lighter material requires less force to accelerate. Less mass = more speed.
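The relationship between reflectivity, mass and acceleration can be sketched with the textbook formula for radiation pressure. This is a simplified model, not the team's design calculation: it assumes a flat sail facing the light head-on that either reflects or transmits the light (no absorption), so the force is 2 x reflectivity x power / speed of light. The laser power and sail mass used below are made-up illustrative values.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def sail_acceleration(power_w, reflectivity, mass_kg):
    """Acceleration (m/s^2) of a flat, non-absorbing sail facing the light.

    power_w      : light power landing on the sail, in watts
    reflectivity : fraction of that light reflected, from 0.0 to 1.0
    mass_kg      : total mass being pushed, in kilograms
    """
    if not 0.0 <= reflectivity <= 1.0:
        raise ValueError("reflectivity must be between 0 and 1")
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    # Each reflected photon reverses direction, hence the factor of 2.
    force_n = 2.0 * reflectivity * power_w / SPEED_OF_LIGHT_M_S
    return force_n / mass_kg

# Hypothetical numbers: 1 kW of light on a sail weighing one milligram.
POWER_W = 1_000.0
MASS_KG = 1e-6

dull_sail = sail_acceleration(POWER_W, 0.5, MASS_KG)
shiny_sail = sail_acceleration(POWER_W, 0.9, MASS_KG)
lighter_shiny_sail = sail_acceleration(POWER_W, 0.9, MASS_KG / 2)
```

In this model, raising the reflectivity raises the acceleration in proportion, and halving the mass doubles it.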

The team used a new AI model to optimize the shape and placement of the holes for higher reflectivity and lower weight. A new gas-based etcher allows the delicate removal of material from beneath the sail, leaving a strong, robust sail. Once suspended, the sail is unlikely to break in space.

These new methods reduced the time taken to make the sail from 15 years to about a day and cut the cost by a factor of thousands. The goal is to have ground-based lasers power hundreds of meter-scale lightsails carrying microchip-sized probes to the stars. We are on the verge of solving space engineering problems that have been unsolvable until now.

Text to Robot

When personal computers first came out, a significant level of programming was required to be able to operate them. Now anyone can operate a personal computer at a useful level. Robotics is currently a domain for experts with the appropriate programming knowledge. Text2Robot is seeking to change that.

A team at Duke University in North Carolina have developed a computational robot design framework that allows anyone to design and build a robot by simply typing a few words. All you need to do is type what it should look like and how it should function.

Text2Robot uses emerging AI technologies to convert user text descriptions into physical robots. The basic body design comes from a text-to-3D generative model, which creates a 3D physical design of the robot’s body based upon the description. A word of warning here: as people are discovering with GPT AI models, prompting the AI well takes a significant degree of skill. That will get easier over time, but we are not there yet.

This basic design is converted into a moving robot model capable of carrying out tasks by incorporating real-world manufacturing constraints such as the placement of electronic components and the functionality and placement of joints. The system uses evolutionary algorithms and reinforcement learning to optimize the robot’s shape, movement ability and control software. The AI understands the physics and biomechanics required.
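For readers curious about what an evolutionary algorithm looks like, here is a toy sketch of the general idea: keep the best designs, make slightly changed copies of them, and repeat. This is not Text2Robot's code. The two design parameters (`leg_length` and `body_length`), the scoring function and every number in it are hypothetical stand-ins for what would really be a physics simulation.

```python
import random

def toy_fitness(design):
    """Hypothetical score: highest when leg is 0.12 m and body is 0.30 m."""
    leg_length, body_length = design
    return -((leg_length - 0.12) ** 2 + (body_length - 0.30) ** 2)

def mutate(design, rng, step=0.02):
    """Return a copy of the design with small random changes to each value."""
    return tuple(max(0.01, value + rng.gauss(0.0, step)) for value in design)

def evolve(generations=50, population_size=20, survivors=5, seed=0):
    """Run a simple keep-the-best evolutionary loop and return the result."""
    rng = random.Random(seed)
    population = [
        (rng.uniform(0.01, 0.5), rng.uniform(0.01, 0.5))
        for _ in range(population_size)
    ]
    history = []  # best score seen at the start of each generation
    for _ in range(generations):
        # Rank designs from best to worst and keep the top few as parents.
        population.sort(key=toy_fitness, reverse=True)
        parents = population[:survivors]
        history.append(toy_fitness(parents[0]))
        # Refill the population with mutated copies of randomly chosen parents.
        children = [
            mutate(rng.choice(parents), rng)
            for _ in range(population_size - survivors)
        ]
        population = parents + children
    best = max(population, key=toy_fitness)
    return best, history

best_design, fitness_history = evolve()
```

Because the best designs are always carried forward unchanged, the top score can never get worse from one generation to the next. A real system would replace `toy_fitness` with a simulated walking test and combine this loop with reinforcement learning for the control software.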

If a user types “an energy efficient walking robot that looks like a dog”, the system generates a manufacturable robot design within minutes. It has a walking simulation within an hour and, in less than a day, the user can 3D print, assemble and watch their robot come to life.

Text2Robot allows us to bridge the gap between our imaginations and reality. Children could design their own pets or interactive art sculptures (something new for you to keep on the fridge door). We could design and build robots for the home.

The framework currently focuses on quadrupedal robots, but this will be expanded into a broader range of robot forms and automated assembly processes. Current robots are limited to basic tasks such as walking, but the future addition of sensors and other technologies will expand their capabilities.

How do we know which piece of tuna will be the tastiest?

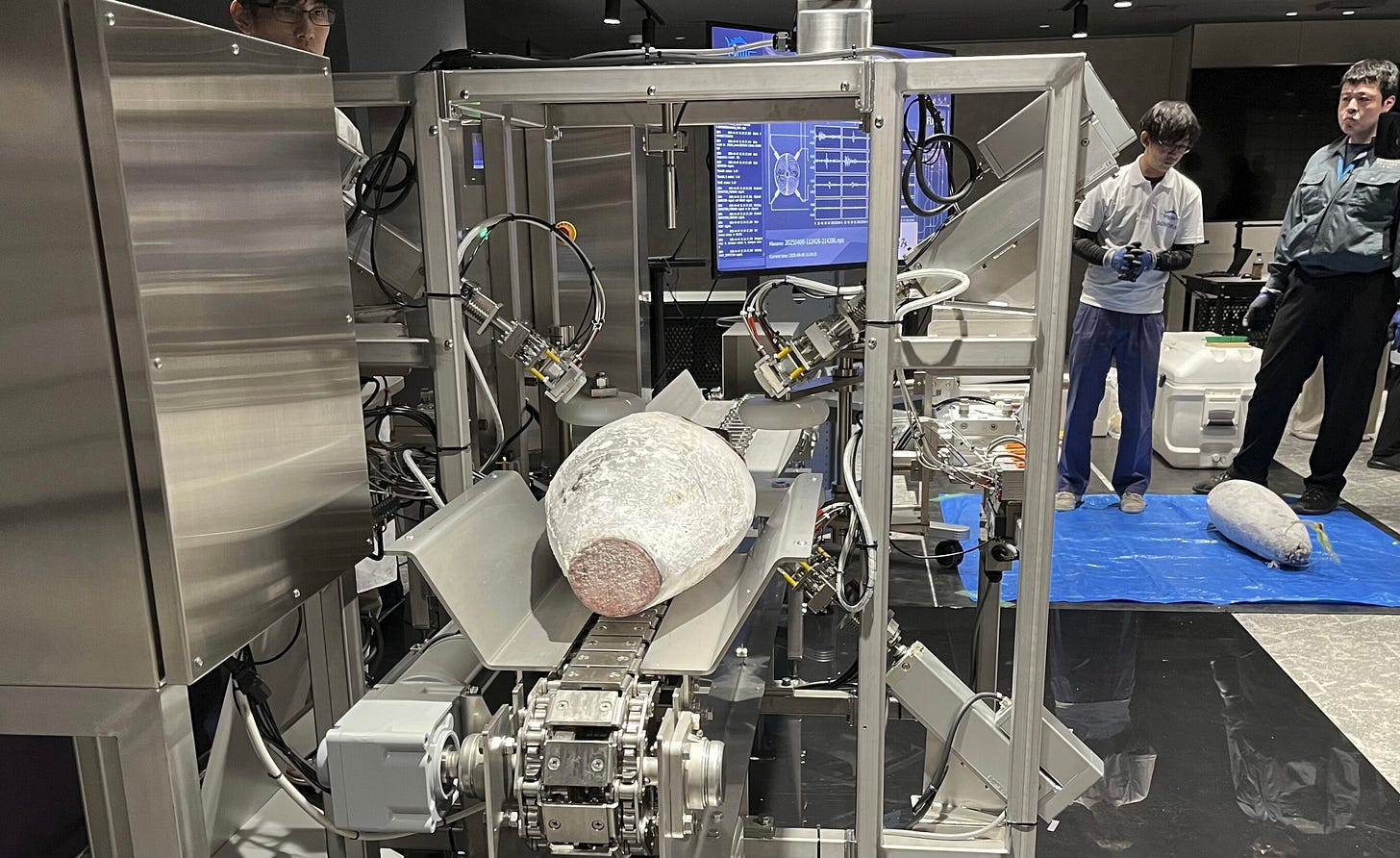

The fatty marbling in tuna used in sashimi and sushi is what makes it tasty. The industry uses the fish’s level of fattiness to judge quality and pricing. Currently skilled individuals assess how fatty a tuna is by cutting the tail with a saw-like knife. This takes about a minute per fish, which adds up to a significant amount of time and cost for large batches.

A new machine called Sonofai, which can be operated by an unskilled technician, uses ultrasound waves to assess the fattiness in about 12 seconds. A conveyor belt transports a whole frozen tuna (about a meter long) into a machine that beams ultrasound waves at it. Sensors pick up the waves and draw a zigzagging diagram to indicate the fish’s fattiness.

Fatty meat absorbs fewer sound waves than lean meat. An AI sorts real data from misleading noise and irregularities. The system is safer, more sanitary and more efficient than the manual method.

The system is likely to be used by fish processing outlets and fishing organizations. The developer, Fujitsu, will put the machine on sale in Japan in June this year for 30 million yen (approximately US$200,000). Further upgrades will test for freshness, firmness and other desirable characteristics of tuna and other fish.

Paying it Forward

If you have a startup or know of a startup that has a product ready for market, please let me know. I would be happy to have a look and feature the startup in this newsletter. Also, if any startups need introductions, please get in touch and I will help where I can.

If you have any questions or comments please comment below.

I would also appreciate it if you could forward this newsletter to anyone that you think might be interested or provide a recommendation on Substack.